- categories: Data Science, Technique

Definition:

Regularization is a technique used in machine learning and optimization to reduce overfitting by adding a penalty term to the loss function. The penalty discourages overly complex models, typically by penalizing large parameter values, which tends to improve the model’s ability to generalize to unseen data.
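In symbols, one common way to write this is shown below. The notation is introduced here for illustration: $\mathcal{L}$ is the data loss, $R$ is the penalty on the parameters $\mathbf{w}$, and $\lambda \ge 0$ is the regularization strength.

$$
J(\mathbf{w}) = \mathcal{L}(\mathbf{w}) + \lambda \, R(\mathbf{w})
$$

Setting $\lambda = 0$ recovers the unregularized objective. Larger $\lambda$ pushes the optimizer toward parameters with a smaller penalty, trading some training fit for simplicity.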

Types:

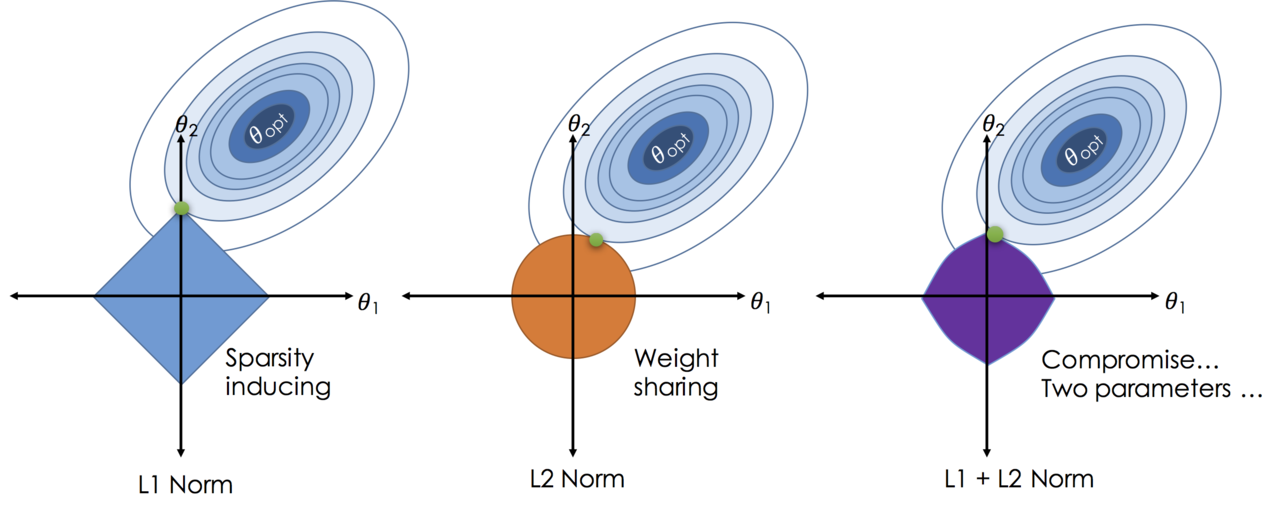

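- L1 (called Lasso when applied to linear regression): adds $\lambda \sum_i |w_i|$, the sum of absolute parameter values. It tends to drive some weights exactly to zero, producing sparse models that effectively perform feature selection.

- L2 (called Ridge when applied to linear regression): adds $\lambda \sum_i w_i^2$, the sum of squared parameter values. It shrinks all weights toward zero but rarely makes any of them exactly zero.

- Combined (Elastic Net): adds $\lambda_1 \sum_i |w_i| + \lambda_2 \sum_i w_i^2$, balancing the sparsity of L1 with the stability of L2 when features are correlated.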
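A minimal plain-Python sketch of the three penalties follows. It assumes the common forms $\lambda \sum_i |w_i|$ for L1, $\lambda \sum_i w_i^2$ for L2, and their weighted sum for the combined penalty. The function names and the `regularized_mse` helper (mean squared error of a linear model plus a penalty) are hypothetical, chosen for illustration. Libraries often parameterize these terms differently, for example by scaling the L2 term by 1/2.

```python
def l1_penalty(w, lam):
    """L1 penalty: lam times the sum of absolute weights."""
    return lam * sum(abs(wi) for wi in w)


def l2_penalty(w, lam):
    """L2 penalty: lam times the sum of squared weights."""
    return lam * sum(wi * wi for wi in w)


def elastic_net_penalty(w, lam1, lam2):
    """Combined penalty: weighted sum of the L1 and L2 terms."""
    return l1_penalty(w, lam1) + l2_penalty(w, lam2)


def regularized_mse(w, X, y, penalty):
    """Hypothetical helper: MSE of the linear model X @ w, plus penalty(w)."""
    preds = [sum(xij * wj for xij, wj in zip(row, w)) for row in X]
    mse = sum((p - t) ** 2 for p, t in zip(preds, y)) / len(y)
    return mse + penalty(w)


if __name__ == "__main__":
    w = [3.0, -0.5, 0.0]
    X = [[1.0, 2.0, 0.0], [0.0, 1.0, 1.0]]
    y = [2.0, -0.5]
    print("L1:", l1_penalty(w, 0.1))
    print("L2:", l2_penalty(w, 0.1))
    print("Elastic net:", elastic_net_penalty(w, 0.1, 0.1))
    # Same data-fit term, penalized two different ways
    print("MSE + L1:", regularized_mse(w, X, y, lambda v: l1_penalty(v, 0.1)))
    print("MSE + L2:", regularized_mse(w, X, y, lambda v: l2_penalty(v, 0.1)))
```

In this toy example the weights fit the data exactly, so the MSE term is zero and each regularized loss equals its penalty alone. Note that L2 punishes the large weight (3.0) much more heavily than L1 does, while both ignore the zero weight.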

Visualization of L1 vs. L2 regularization:
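Figures of this kind commonly contrast the diamond-shaped L1 constraint region with the circular L2 region. The same contrast can be checked numerically. The sketch below assumes a deliberately simplified setup: a single weight $w$ fit to a target $z$ with the squared-error term $\tfrac{1}{2}(w - z)^2$. Under L1 the minimizer is the soft-thresholding rule; under L2 (penalty $\lambda w^2$) it is a uniform rescaling $z / (1 + 2\lambda)$. The function names are hypothetical.

```python
def l1_solution(z, lam):
    """Minimizer of 0.5*(w - z)**2 + lam*|w| (soft-thresholding)."""
    if abs(z) <= lam:
        return 0.0  # small targets are snapped exactly to zero
    return z - lam if z > 0 else z + lam  # larger ones shrink by lam


def l2_solution(z, lam):
    """Minimizer of 0.5*(w - z)**2 + lam*w**2 (uniform shrinkage)."""
    return z / (1.0 + 2.0 * lam)


if __name__ == "__main__":
    lam = 0.5
    for z in [3.0, 0.8, 0.2, -0.5]:
        print(f"z={z:5.2f}  L1 -> {l1_solution(z, lam):6.3f}  L2 -> {l2_solution(z, lam):6.3f}")
```

With `lam = 0.5`, the two small targets map to exactly zero under L1, while L2 halves every value and never produces an exact zero. This is the numerical counterpart of why L1 yields sparse models and L2 does not.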