- categories: Data Science, Architecture

Definition:

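Long Short-Term Memory (LSTM) is a type of Recurrent Neural Network (RNN) architecture designed primarily to mitigate the vanishing gradient problem encountered in standard RNNs (see Vanishing and Exploding Gradient Problem). Exploding gradients can still occur and are usually handled separately, for example with gradient clipping. An LSTM introduces a memory cell and gating mechanisms that allow the network to selectively retain, forget, and update information, making it well suited to learning long-term dependencies in sequential data.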

Introduced by Hochreiter and Schmidhuber in 1997, LSTMs are widely used in tasks like natural language processing, speech recognition, and time-series forecasting.

Architecture

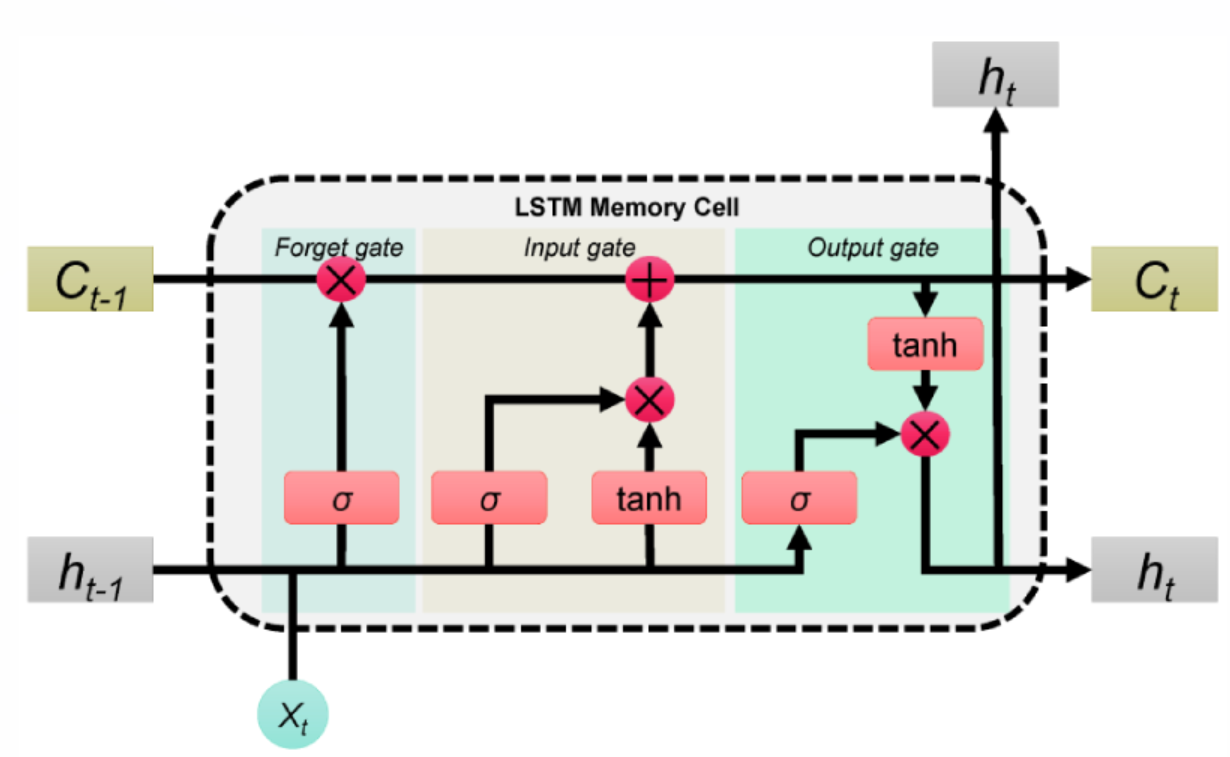

An LSTM cell consists of:

- Cell State ($C_t$):
  - Represents the long-term memory of the LSTM.
  - Modified directly by the forget and input gates; the output gate only reads it.
- Hidden State ($h_t$):
  - Represents the short-term output of the LSTM cell.
  - Passed to the next time step (and to the next layer in a stacked LSTM) and used for predictions.
- Gating Mechanisms:
  - Regulate the flow of information through the LSTM cell:
    - Forget gate: Decides what information to discard from the cell state.
    - Input gate: Decides what new information to add to the cell state.
    - Output gate: Decides which parts of the cell state to expose as the hidden state.
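To make the two states concrete, here is a minimal sketch using PyTorch's `nn.LSTM`, which returns the hidden state at every time step together with the final hidden and cell states. The tensor sizes are arbitrary values chosen for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Illustrative sizes (assumptions, not taken from the text above)
batch, seq_len, n_features, n_hidden = 3, 7, 4, 6

lstm = nn.LSTM(input_size=n_features, hidden_size=n_hidden, batch_first=True)
x = torch.randn(batch, seq_len, n_features)

# out holds the hidden state at every time step;
# h_n and c_n are the final hidden state and final cell state.
out, (h_n, c_n) = lstm(x)

print(out.shape)  # torch.Size([3, 7, 6])
print(h_n.shape)  # torch.Size([1, 3, 6])
print(c_n.shape)  # torch.Size([1, 3, 6])
```

For a single-layer, unidirectional LSTM like this one, the last time step of `out` is the same tensor of values as `h_n[0]`. The cell state is only exposed for the final step.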

Mathematical Formulation

Let:

- $x_t$: Input at time step $t$.
- $h_{t-1}$: Previous hidden state.
- $C_{t-1}$: Previous cell state.
- $W$ and $b$: Weight matrices and biases (one pair per gate).

1. Forget Gate:

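Controls which parts of the cell state to forget:

$$f_t = \sigma(W_f \cdot [h_{t-1}, x_t] + b_f)$$

where $\sigma$ is the sigmoid activation function, $[h_{t-1}, x_t]$ denotes the concatenation of the previous hidden state and the current input, and each entry of $f_t$ lies in $(0, 1)$.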

2. Input Gate:

Decides which new information to add to the cell state:

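- Compute the candidate values:

$$\tilde{C}_t = \tanh(W_C \cdot [h_{t-1}, x_t] + b_C)$$

- Compute the input gate activations:

$$i_t = \sigma(W_i \cdot [h_{t-1}, x_t] + b_i)$$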

3. Update Cell State:

Update the cell state with a combination of the old state and the new candidate values:

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t$$

where $\odot$ denotes elementwise multiplication.
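A small worked example may help show what the elementwise update does. The numbers below are hand-picked for illustration (not produced by a trained model), and the gates are shown as exactly 0 or 1 in two positions for readability, even though a sigmoid only approaches those values.

```python
# Hand-picked illustrative values (assumptions, not from a trained model)
f_t = [1.0, 0.0, 0.5]        # forget gate: keep all, drop all, keep half
i_t = [0.0, 1.0, 0.5]        # input gate: write nothing, write fully, write half
c_prev = [2.0, -1.0, 4.0]    # previous cell state
c_tilde = [0.9, 0.3, -0.5]   # candidate values (tanh output, so within (-1, 1))

# New cell state: gated old memory plus gated candidate, element by element
c_t = [f * c + i * g for f, c, i, g in zip(f_t, c_prev, i_t, c_tilde)]

print(c_t)  # [2.0, 0.3, 1.75]
```

The first entry keeps the old memory untouched, the second replaces it with the candidate, and the third blends the two.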

4. Output Gate:

Decides which information to output:

- Compute the output gate activations:

$$o_t = \sigma(W_o \cdot [h_{t-1}, x_t] + b_o)$$

- Compute the hidden state (short-term memory):

$$h_t = o_t \odot \tanh(C_t)$$
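The four steps can be sketched as a single function and checked against PyTorch's `nn.LSTMCell`. The helper name `lstm_step`, the tensor sizes, and the choice to stack all four weight matrices into one matrix `W` are assumptions made for this illustration. The stacking order (input gate, forget gate, candidate, output gate) follows the order `nn.LSTMCell` uses for its parameters.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step written directly from the gate equations.

    W maps the concatenation [h_prev, x_t] to four stacked pre-activations
    (input gate, forget gate, candidate, output gate); b is the stacked bias.
    """
    z = torch.cat([h_prev, x_t], dim=1) @ W.T + b
    z_i, z_f, z_g, z_o = z.chunk(4, dim=1)
    i_t = torch.sigmoid(z_i)            # input gate
    f_t = torch.sigmoid(z_f)            # forget gate
    c_tilde = torch.tanh(z_g)           # candidate values
    o_t = torch.sigmoid(z_o)            # output gate
    c_t = f_t * c_prev + i_t * c_tilde  # cell state update
    h_t = o_t * torch.tanh(c_t)         # hidden state
    return h_t, c_t

n_in, n_hid, batch = 4, 3, 2            # illustrative sizes
cell = nn.LSTMCell(n_in, n_hid)

# Reuse the reference cell's parameters: W = [W_hh | W_ih], b = b_ih + b_hh
W = torch.cat([cell.weight_hh, cell.weight_ih], dim=1).detach()
b = (cell.bias_ih + cell.bias_hh).detach()

x_t = torch.randn(batch, n_in)
h_prev = torch.randn(batch, n_hid)
c_prev = torch.randn(batch, n_hid)

h_t, c_t = lstm_step(x_t, h_prev, c_prev, W, b)
with torch.no_grad():
    h_ref, c_ref = cell(x_t, (h_prev, c_prev))

print(torch.allclose(h_t, h_ref, atol=1e-5))
print(torch.allclose(c_t, c_ref, atol=1e-5))
```

PyTorch keeps separate input-to-hidden and hidden-to-hidden weights and two bias vectors. Concatenating the weights and summing the biases gives the single-matrix form used in the equations.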

Flow of Information

- Input: Combine $x_t$ (current input) and $h_{t-1}$ (previous hidden state).
- Forget: Discard parts of $C_{t-1}$ (previous cell state) based on $f_t$.
- Add: Incorporate new information into the cell state based on $i_t$.
- Output: Compute the output $h_t$ using the updated cell state and the output gate $o_t$.
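Over a whole sequence, this flow repeats once per time step, with the hidden and cell states carried forward. The loop below is a minimal sketch using `nn.LSTMCell`. The sizes and the zero initial states are illustrative assumptions.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

n_in, n_hid, batch, seq_len = 4, 3, 2, 5   # illustrative sizes
cell = nn.LSTMCell(n_in, n_hid)
x = torch.randn(batch, seq_len, n_in)

# Start from empty memory (zero initial states are an assumption here)
h = torch.zeros(batch, n_hid)
c = torch.zeros(batch, n_hid)

outputs = []
for t in range(seq_len):
    # Each step consumes the current input and the previous (h, c) pair
    h, c = cell(x[:, t, :], (h, c))
    outputs.append(h)

outputs = torch.stack(outputs, dim=1)      # (batch, seq_len, n_hid)
print(outputs.shape)  # torch.Size([2, 5, 3])
```

Because the hidden state is a sigmoid-gated `tanh` of the cell state, every entry of `outputs` stays strictly between -1 and 1, while the cell state itself is not bounded in this way.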

Key Advantages

- Handles Long-Term Dependencies:
  - The additive cell-state update lets gradients flow across many time steps with far less vanishing than in a standard RNN.
- Selective Memory:
  - Gating mechanisms enable the LSTM to learn what to retain and what to forget.
- Versatile:
  - Performs well on tasks requiring sequence modeling, like language modeling, speech processing, and video analysis.
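The gradient-flow advantage can be made concrete with a short derivation. Starting from the cell state update $C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t$, and assuming for simplicity that the gate activations are treated as constants with respect to $C_{t-1}$ (that is, ignoring their indirect dependence through $h_{t-1}$), the Jacobian along the cell state is approximately:

$$\frac{\partial C_t}{\partial C_{t-1}} \approx \operatorname{diag}(f_t), \qquad \frac{\partial C_T}{\partial C_k} \approx \prod_{t=k+1}^{T} \operatorname{diag}(f_t)$$

When the forget gate stays close to 1, this product stays close to the identity, so the gradient reaching step $k$ is largely preserved. In a standard RNN the corresponding product repeatedly multiplies by the recurrent weight matrix and the activation derivative, which tends to shrink or grow exponentially with the number of steps.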

Limitations

- Computationally Expensive:
  - LSTMs are slower to train than simpler architectures like standard RNNs, because each time step computes four affine transformations (three gates plus the candidate) instead of one.
- Complexity:
  - The gating mechanisms and multiple states increase the number of parameters, requiring careful tuning and more data.
- Not Always Optimal:
  - In certain cases, other architectures such as the simpler Gated Recurrent Unit (GRU) or attention-based Transformers outperform LSTMs.

Applications

- Natural Language Processing (NLP):
  - Sentiment analysis, machine translation, text generation.
- Speech Recognition:
  - Recognizing speech patterns and phonemes.
- Time-Series Forecasting:
  - Stock prices, weather prediction, energy demand.
- Video Processing:
  - Action recognition and video captioning.

Comparison with Other Architectures

| Feature | RNN | LSTM | GRU |
|---|---|---|---|
| Handles Long Sequences | Poor | Excellent | Good |
| Complexity | Low | High | Moderate |
| Training Time | Fast | Slow | Faster than LSTM |
| Parameters | Fewer | More (gates) | Fewer than LSTM |
| Usage | Simple tasks | Long-term dependencies | Long-term dependencies |
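The parameter row can be checked directly by counting the parameters of PyTorch's single-layer `nn.RNN`, `nn.LSTM`, and `nn.GRU`. The helper `n_params` and the layer sizes are assumptions chosen for illustration.

```python
import torch.nn as nn

def n_params(module):
    """Total number of trainable scalars in a module."""
    return sum(p.numel() for p in module.parameters())

input_size, hidden_size = 10, 20   # illustrative sizes

counts = {
    "RNN": n_params(nn.RNN(input_size, hidden_size)),
    "LSTM": n_params(nn.LSTM(input_size, hidden_size)),
    "GRU": n_params(nn.GRU(input_size, hidden_size)),
}
print(counts)  # {'RNN': 640, 'LSTM': 2560, 'GRU': 1920}
```

With identical sizes, the LSTM has four times the parameters of the plain RNN (three gates plus the candidate) and the GRU has three times, which matches the ordering in the table.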

Implementation in PyTorch

```python
import torch
import torch.nn as nn


# Define an LSTM model
class LSTMModel(nn.Module):
    def __init__(self, input_size, hidden_size, num_layers, output_size):
        super().__init__()
        self.lstm = nn.LSTM(input_size, hidden_size, num_layers, batch_first=True)
        self.fc = nn.Linear(hidden_size, output_size)

    def forward(self, x):
        # LSTM layer: lstm_out has shape (batch, seq_len, hidden_size)
        lstm_out, (hidden, cell) = self.lstm(x)
        # Fully connected layer (use last time step output)
        output = self.fc(lstm_out[:, -1, :])
        return output


# Hyperparameters
input_size = 10   # Number of features
hidden_size = 20  # Number of hidden units
num_layers = 2    # Number of LSTM layers
output_size = 1   # Output dimension

# Instantiate the model
model = LSTMModel(input_size, hidden_size, num_layers, output_size)

# Example input
x = torch.randn(5, 50, input_size)  # Batch of 5 sequences, each of length 50
output = model(x)
print(output.shape)  # Output shape: torch.Size([5, 1])
```